
PyTorch "ShortFormer" - RoBERTa w/Chunks (kaggle study__2)

Category: 자율주행스터디 (Autonomous Driving Study) · 2022. 1. 21. 11:29

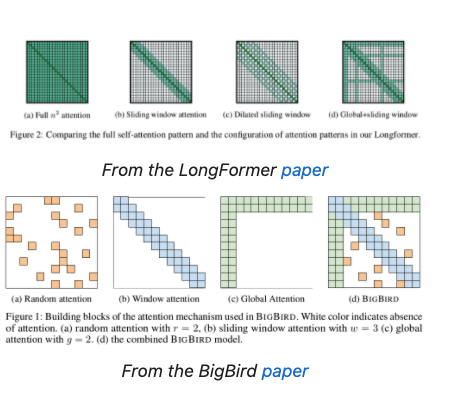

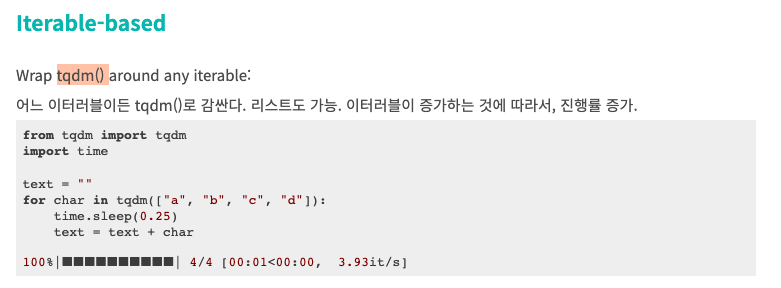

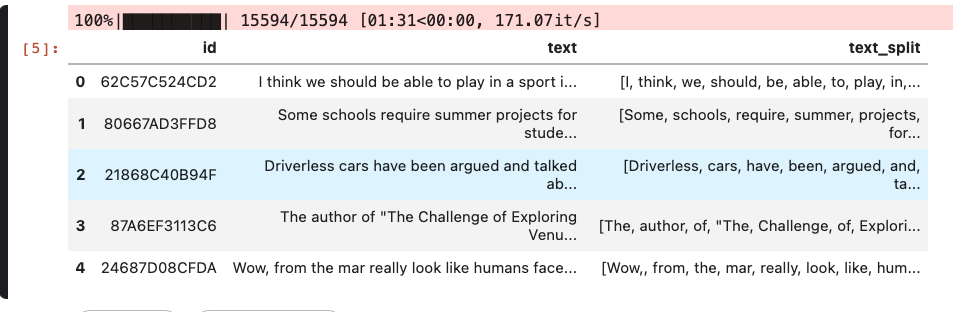

Transformer models are great, and we all love them. However, the self-attention mechanism at the heart of the Transformer architecture contains a matrix multiplication whose memory cost grows (at least) quadratically with the input sequence length. This operation is expensive, and it makes vanilla Transformers prohibitively costly on long sequences. That is why BERT-like models, which we see and use all the time, are capped at 512 tokens.

```python
import os
import gc
import time
from tqdm import tqdm
from collections import defaultdict

import numpy as np
import pandas as pd
from sklearn.metrics import accuracy_score

import torch
from torch.utils.data import Dataset, DataLoader
from transformers import AutoConfig, AutoTokenizer, AutoModelForTokenClassification

os.environ['TOKENIZERS_PARALLELISM'] = 'false'
```

```python
# This is used to download the model from the huggingface hub
MODEL_NAME = 'roberta-base'
# Path where to download the model
MODEL_PATH = 'model'
# Max length for the tokenization and the model
# For BERT-like models it's 512 in general
MAX_LENGTH = 512
# The overlapping tokens when chunking the texts
# Possibly a power of 2 would have been better
# Tried with 386 and didn't improve
DOC_STRIDE = 200
# Training configuration
# 5 epochs with different learning rates (inherited from Chris')
# Haven't tried variations yet
config = {'train_batch_size': 4,
          'valid_batch_size': 4,
          'epochs': 5,
          'learning_rates': [2.5e-5, 2.5e-5, 2.5e-6, 2.5e-6, 2.5e-7],  # one per epoch
          'max_grad_norm': 10,
          'device': 'cuda' if torch.cuda.is_available() else 'cpu'}
```

```python
df_all = pd.read_csv('../input/feedback-prize-2021/train.csv')
print(df_all.shape)
display(df_all.head())  # display() is provided by Jupyter/Kaggle notebooks
```

```python
# https://www.kaggle.com/raghavendrakotala/fine-tunned-on-roberta-base-as-ner-problem-0-533
train_names, train_texts = [], []
for f in tqdm(list(os.listdir('../input/feedback-prize-2021/train'))):
    train_names.append(f.replace('.txt', ''))
    # Use a context manager so each essay file is closed after reading
    with open('../input/feedback-prize-2021/train/' + f, 'r') as fh:
        train_texts.append(fh.read())
df_texts = pd.DataFrame({'id': train_names, 'text': train_texts})
# Whitespace split: one list of words per essay
df_texts['text_split'] = df_texts.text.str.split()
df_texts.head()
```
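To make the quadratic cost and the chunking settings concrete, here is a minimal plain-Python sketch. It assumes `DOC_STRIDE` means the number of tokens two consecutive windows share (as the config comment says) and ignores the special tokens (`<s>`, `</s>`) that RoBERTa's tokenizer adds, so a real window holds slightly fewer text tokens. `attention_scores_per_head` and `chunk_token_ids` are hypothetical helpers for illustration, not functions from the notebook; Hugging Face fast tokenizers can also produce overlapping windows themselves via `return_overflowing_tokens=True` with `stride=...`.

```python
from typing import List


def attention_scores_per_head(n_tokens: int) -> int:
    """Self-attention keeps one score per (query, key) pair: n * n entries."""
    return n_tokens * n_tokens


def chunk_token_ids(ids: List[int], max_len: int = 512, overlap: int = 200) -> List[List[int]]:
    """Split token ids into windows of at most max_len tokens, where each
    window shares `overlap` tokens with the previous one."""
    if not 0 <= overlap < max_len:
        raise ValueError("overlap must be in [0, max_len)")
    step = max_len - overlap  # how far each new window advances
    chunks, start = [], 0
    while True:
        chunks.append(ids[start:start + max_len])
        if start + max_len >= len(ids):
            break  # this window already reaches the end of the text
        start += step
    return chunks


# Quadratic growth: 8x more tokens means 64x more attention scores
for n in (512, 1024, 4096):
    print(n, attention_scores_per_head(n))

# A hypothetical 1,000-token essay with MAX_LENGTH=512 and DOC_STRIDE=200
chunks = chunk_token_ids(list(range(1000)), max_len=512, overlap=200)
print([(c[0], c[-1]) for c in chunks])
```

With these settings each new window advances by 512 - 200 = 312 tokens, so a 1,000-token text becomes three windows starting at tokens 0, 312 and 624, and every window stays within the 512-token limit the model was pretrained with.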

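**iterrows() is a generator that iterates over the rows of a DataFrame, yielding each row's index together with an object containing the row itself. ... In the example, iterrows() solves essentially the same problem about 4 times faster than iterating over the rows manually.**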

The DataFrame.iterrows() method yields (index, Series) pairs.
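A tiny illustration of what iterrows() hands back, using a made-up two-row DataFrame (the column names and index labels are hypothetical, chosen only for the example):

```python
import pandas as pd

# Hypothetical toy frame with a non-default index so the labels are visible
df = pd.DataFrame({'id': ['a', 'b'], 'n_words': [3, 5]}, index=[10, 20])

pairs = []
for idx, row in df.iterrows():
    # idx is the index label; row is a pandas Series keyed by column name
    pairs.append((idx, row['id'], row['n_words']))
    print(idx, type(row).__name__, row['id'], row['n_words'])

# iterrows() returns a generator, which has no len(); the row count has to
# come from the DataFrame itself, e.g. for tqdm's total= argument.
print(len(df))
```

Because each row is turned into a Series, values from columns of different dtypes are combined into one Series, so dtypes are not guaranteed to be preserved across a row.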

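```python
# https://www.kaggle.com/cdeotte/pytorch-bigbird-ner-cv-0-615
# Build one word-level BIO label per whitespace-split word of each essay
all_entities = []
for _, row in tqdm(df_texts.iterrows(), total=len(df_texts)):
    total = len(row['text_split'])
    entities = ["O"] * total  # words outside any discourse element stay "O"
    for _, row2 in df_all[df_all['id'] == row['id']].iterrows():
        discourse = row2['discourse_type']
        # predictionstring holds the word indices covered by this element
        list_ix = [int(x) for x in row2['predictionstring'].split(' ')]
        entities[list_ix[0]] = f"B-{discourse}"  # first word: Begin
        for k in list_ix[1:]:
            entities[k] = f"I-{discourse}"  # remaining words: Inside
    all_entities.append(entities)
df_texts['entities'] = all_entities
df_texts.to_csv('train_NER.csv', index=False)
print(df_texts.shape)
df_texts.head()
```

**Taking `len()` of the DataFrame gives its number of rows**, so it is passed to tqdm as `total`; the iterrows() generator itself has no length.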
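The labeling loop above can be isolated into a small function to see what it produces. This is a sketch that mirrors that loop on toy data: `bio_tags` is a hypothetical helper, and the sentence and spans are made up; the only assumption carried over from the notebook is that `predictionstring` is a space-separated list of word indices.

```python
from typing import List, Tuple


def bio_tags(num_words: int, spans: List[Tuple[str, str]]) -> List[str]:
    """Word-level BIO tags from (discourse_type, predictionstring) pairs:
    the first word of a span gets B-, the rest get I-, all others stay O."""
    tags = ['O'] * num_words
    for discourse, prediction_string in spans:
        word_ids = [int(x) for x in prediction_string.split(' ')]
        tags[word_ids[0]] = f'B-{discourse}'
        for k in word_ids[1:]:
            tags[k] = f'I-{discourse}'
    return tags


# Hypothetical essay fragment and annotations
words = 'Cars are useful . They save time'.split()
spans = [('Lead', '0 1 2'), ('Claim', '4 5 6')]
for word, tag in zip(words, bio_tags(len(words), spans)):
    print(f'{word:>7}  {tag}')
```

Here "." is not covered by any span and keeps the `O` tag, which is how words between discourse elements end up labeled in `df_texts['entities']`.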

```python
# Check that we have created one entity/label for each word correctly
(df_texts['text_split'].str.len() == df_texts['entities'].str.len()).all()
```

```python
# Create global dictionaries to use during training and inference
# https://www.kaggle.com/cdeotte/pytorch-bigbird-ner-cv-0-615
output_labels = ['O', 'B-Lead', 'I-Lead', 'B-Position', 'I-Position', 'B-Claim', 'I-Claim',
                 'B-Counterclaim', 'I-Counterclaim', 'B-Rebuttal', 'I-Rebuttal',
                 'B-Evidence', 'I-Evidence', 'B-Concluding Statement', 'I-Concluding Statement']

LABELS_TO_IDS = {v: k for k, v in enumerate(output_labels)}  # tag name -> class id
IDS_TO_LABELS = {k: v for k, v in enumerate(output_labels)}  # class id -> tag name
LABELS_TO_IDS
```

Finding the unique values in `id`:

```python
# CHOOSE VALIDATION INDEXES
IDS = df_all.id.unique()
print(f'There are {len(IDS)} train texts. We will split 90% 10% for validation.')

# TRAIN VALID SPLIT 90% 10%
np.random.seed(42)
train_idx = np.random.choice(np.arange(len(IDS)), int(0.9 * len(IDS)), replace=False)
valid_idx = np.setdiff1d(np.arange(len(IDS)), train_idx)
np.random.seed(None)

# CREATE TRAIN SUBSET AND VALID SUBSET
df_train = df_texts.loc[df_texts['id'].isin(IDS[train_idx])].reset_index(drop=True)
df_val = df_texts.loc[df_texts['id'].isin(IDS[valid_idx])].reset_index(drop=True)

print(f"FULL Dataset : {df_texts.shape}")
print(f"TRAIN Dataset: {df_train.shape}")
print(f"TEST Dataset : {df_val.shape}")
```

**`backbone`: the model is created automatically through the Auto class (`AutoModelForTokenClassification`).**

```python
def download_model():
    # https://www.kaggle.com/cdeotte/pytorch-bigbird-ner-cv-0-615
    # exist_ok=True so re-running the cell does not fail if the folder exists
    os.makedirs(MODEL_PATH, exist_ok=True)
    # add_prefix_space=True is needed to tokenize pre-split words with RoBERTa's tokenizer
    tokenizer = AutoTokenizer.from_pretrained(MODEL_NAME, add_prefix_space=True)
    tokenizer.save_pretrained(MODEL_PATH)
    config_model = AutoConfig.from_pretrained(MODEL_NAME)
    config_model.num_labels = 15  # = len(output_labels)
    config_model.save_pretrained(MODEL_PATH)
    backbone = AutoModelForTokenClassification.from_pretrained(MODEL_NAME, config=config_model)
    backbone.save_pretrained(MODEL_PATH)
    print(f"Model downloaded to {MODEL_PATH}/")

download_model()
```
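The hard-coded `config_model.num_labels = 15` follows from the BIO scheme behind `output_labels`: one `O` tag plus a `B-`/`I-` pair for each of the seven discourse types, 1 + 2 × 7 = 15. A minimal sketch that rebuilds the list and both lookup tables from the discourse types; `DISCOURSE_TYPES` and `build_bio_labels` are hypothetical names introduced here, with the type order copied from `output_labels`.

```python
# Discourse types in the same order as output_labels in this post
DISCOURSE_TYPES = ['Lead', 'Position', 'Claim', 'Counterclaim',
                   'Rebuttal', 'Evidence', 'Concluding Statement']


def build_bio_labels(types):
    """'O' first, then a B-/I- pair for each discourse type."""
    labels = ['O']
    for t in types:
        labels += [f'B-{t}', f'I-{t}']
    return labels


labels = build_bio_labels(DISCOURSE_TYPES)
label2id = {label: i for i, label in enumerate(labels)}
id2label = {i: label for i, label in enumerate(labels)}

print(len(labels))
print(label2id['B-Claim'], id2label[6])
```

Writing `len(output_labels)` instead of the literal 15 would keep the model config in sync if a tag were ever added. Transformers configs also have `id2label`/`label2id` attributes that could hold these mappings so predictions print as tag names; `download_model` above does not set them.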
